Jan 24, 2026

Using LLMs for document OCR: What you need to know

Ståle Zerener

If you’ve ever tried to pull structured information out of a PDF or scanned document—like totals from invoices or names from contracts—you’ve likely run into OCR tools. Traditional OCR can copy text from images, but it doesn’t understand what that text means.

That’s where large language models (LLMs) come in. Instead of just reading characters and words, they interpret text in context—understanding relationships, labels, and meaning.

LLM OCR combines traditional Optical Character Recognition (OCR) with large language models, allowing systems to both read text from documents and understand it semantically. This makes it possible to extract structured data from complex layouts and unlock new automation workflows, especially for no-code and low-code use cases.

In this post, we’ll walk through what LLM OCR is, how it differs from traditional OCR, and when it makes sense to use it.

OCR vs LLM-based OCR

Traditional OCR works like a scanner with copy-paste: it finds text on a page and spits it out.

LLM-based OCR works more like an assistant. You can ask it, “What’s the total on this invoice?” or “Who is the sender of this letter?”, and it will find and return that specific piece of information, because it understands context and semantics. In most benchmarks, traditional OCR engines are still better than LLMs at reading text accurately from images, but LLMs understand meaning: they can tell the difference between a total amount and a tax amount.

| Feature | Traditional OCR | LLM-based OCR |
|---|---|---|
| Text extraction | ✔️ | ✔️ |
| Context understanding | ❌ | ✔️ |
| Semantic understanding | ❌ | ✔️ |
| Coordinates / precise location | ✔️ | ❌ |
| Confidence scores | ✔️ (on a word or character basis) | Usually no |
| Structured output | ✔️ | Usually no |

Hallucinations, when LLMs start guessing

LLMs are good at guessing. Sometimes too good. If part of a document is blurry or missing, a language model is prone to “fill in the blanks” with what should be there. This is called hallucination, and what makes it dangerous is that it is often very subtle:

A total that looks plausible, but is wrong.

A missing invoice number that gets made up.

A date that gets reformatted incorrectly.

The problem? These mistakes often look correct. If you’re using the output in an automation, you may not notice until something breaks or until incorrect data gets stored.

Unlike traditional OCR tools, LLMs don’t give you a confidence score out of the box. So how can you tell if the output is reliable? Here’s the trick: you can infer confidence from how decisively the model chose each word of its answer, even if it doesn’t say so directly. For example:

If the model quickly picked a clear answer (e.g. “Total: $530.20”), it was likely more confident.

If it hesitated or flipped between options internally (e.g. “Is that an I or a 1?”), it was less sure.

Some tools look at how certain the model was when choosing each word and turn that into a confidence estimate, usually a percentage per field. These aren’t perfect, but they help you decide when a human should double-check. In practice, you don’t need to understand how the AI works under the hood, just know that:

✅ Higher certainty = safe to automate

⚠️ Lower certainty = good candidate for manual review
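
As a rough illustration of how such a per-field estimate can be derived, the sketch below assumes your model or API can return a log-probability for each generated token (availability and format vary by provider). The function name `field_confidence` and the sample values are hypothetical, and multiplying the token probabilities together is just one simple way to combine them.

```python
import math

def field_confidence(token_logprobs):
    """Combine per-token log-probabilities into one value between 0 and 1.

    Uses the joint probability of the whole token sequence, so a single
    uncertain token (e.g. "I" vs "1") pulls the whole field down.
    """
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs))

# Hypothetical log-probabilities for the tokens of two extracted fields.
total_logprobs = [-0.01, -0.02, -0.01]       # model was sure of every token
invoice_no_logprobs = [-0.01, -0.69, -0.05]  # one token was close to a coin flip

print(round(field_confidence(total_logprobs), 2))       # 0.96
print(round(field_confidence(invoice_no_logprobs), 2))  # 0.47
```

Because the joint probability shrinks as a field gets longer, tools may prefer an average or the minimum token probability instead. Whichever you pick, treat the number as a ranking signal rather than a calibrated percentage.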

Humans-in-the-loop to the rescue

You don’t want to manually check every result. After all, that kills the benefit of automation. On the other hand, you don’t want to lose control either, especially when dealing with business-critical documents.

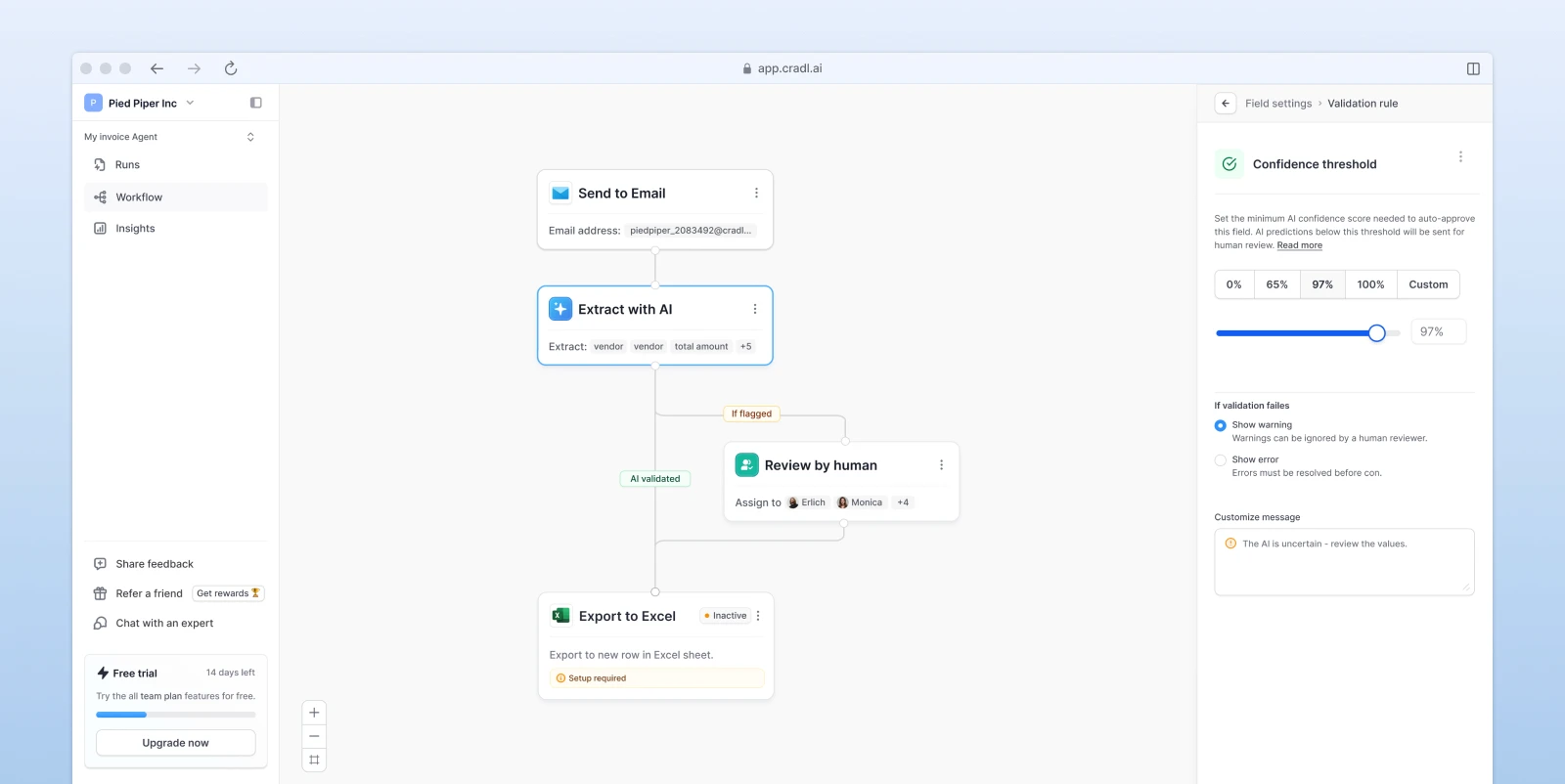

Instead, use a human-in-the-loop approach:

Let the model handle everything by default.

Flag fields it’s unsure about.

Only review those specific pieces.

This way, the bulk of your documents (say, 90%) can flow through untouched. Humans only check what really needs checking.
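
The three steps above can be sketched as a simple threshold check. This is a minimal sketch, assuming each extracted field arrives with a confidence value between 0 and 1. The 0.90 cut-off, the function name `split_for_review`, and the sample invoice are all made up for illustration.

```python
REVIEW_THRESHOLD = 0.90  # assumed cut-off; tune it per field and document type

def split_for_review(fields, threshold=REVIEW_THRESHOLD):
    """Split extracted fields into auto-approved and needs-review groups.

    `fields` maps a field name to a (value, confidence) pair.
    """
    approved, needs_review = {}, {}
    for name, (value, confidence) in fields.items():
        target = approved if confidence >= threshold else needs_review
        target[name] = value
    return approved, needs_review

# Hypothetical extraction result for one invoice.
extracted = {
    "total": ("530.20", 0.98),
    "invoice_number": ("INV-1O23", 0.47),  # "O" or "0"? The model was unsure.
    "due_date": ("2026-02-15", 0.95),
}

approved, needs_review = split_for_review(extracted)
print(approved)      # fields that flow straight through
print(needs_review)  # fields a human should look at
```

Here only the invoice number lands in the review queue. The reviewer checks one field rather than the whole document.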

This setup works especially well in environments where:

You’re working with sensitive data (e.g. billing, identity, legal).

You need to build trust with stakeholders.

You want to scale confidently without hiring a review team for every doc.

Why ChatGPT is not a document workflow tool

It’s tempting to use tools like ChatGPT, Claude, or Gemini directly for document tasks. You upload a PDF, ask a question, and get a seemingly accurate answer. It feels like magic and it works really well for one-off tasks. But there’s an important distinction. These chat-based models are great for exploration and prototyping, but not for running production pipelines.

Here’s what they are good for:

Trying things out quickly

Extracting ad hoc insights or text

Understanding text in context

But they don’t give you:

Consistent, structured output (like clean JSON)

Confidence scores or uncertainty signals

Integration with automation tools or databases

Error handling or fallbacks

The ability to train or fine-tune the model on your specific document types or field definitions

In short: chat-based tools are flexible and powerful, but they aren’t built for repeatable, scalable workflows. If you’re building something serious, especially with financial, legal, or compliance needs, you’ll want something more robust and tailored.
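
To make the "structured output" and "error handling" points concrete, here is a minimal sketch of the kind of check a production pipeline would run on every model response before trusting it. The required field names and the function `parse_extraction` are hypothetical. It assumes the model was asked to reply with a JSON object.

```python
import json

REQUIRED_FIELDS = {"total", "invoice_number", "due_date"}  # hypothetical schema

def parse_extraction(raw_response):
    """Parse a model response and check it against the expected fields.

    Returns (data, None) on success, or (None, reason) so the caller can
    retry or route the document to manual review.
    """
    try:
        data = json.loads(raw_response)
    except json.JSONDecodeError as exc:
        return None, f"invalid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return None, "expected a JSON object"
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        return None, f"missing fields: {sorted(missing)}"
    return data, None

# A chatty answer is fine for a human but unusable for an automation.
print(parse_extraction("Sure! The total is $530.20."))
# An incomplete object is caught before it reaches your database.
print(parse_extraction('{"total": "530.20"}'))
```

A chat interface leaves this checking to whoever reads the answer. A workflow tool has to do it on every document and decide what happens when it fails.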

What to look for in a tool

If you're choosing a platform for document extraction, look for one that:

Supports LLM-based extraction, not just OCR

Gives you confidence estimates per field

Lets you flag and correct uncertain fields, ideally with feedback loops

Plays well with the rest of your stack (like Zapier, Make, or webhooks)

Some tools (like Cradl.ai) offer this out of the box: LLM-powered extraction, built-in review workflows, and integrations that let you automate from end to end.

Final thoughts

LLM-based OCR is a breakthrough for no-code and citizen developers. You can finally build flows that understand documents — not just read them.

But understanding doesn’t mean infallibility.

Use confidence scoring, review uncertain cases, and let automation handle the rest. That’s how you move fast without breaking things.